What could the data analytics function look like if it adopted a product mindset, similar to how the data engineering function has evolved to treat data pipelines as real products to be developed and nurtured?

Today, analytics is primarily an internal “service” function performed by analysts, either centralized or embedded within business domains. These analysts toil on top of data assets, writing and rewriting queries that are often overlapping and repetitive in logic, to answer a never-ending list of questions from insight-hungry business domains.

Efforts to establish true “self-serve” capabilities have proven challenging or elusive, due either to

(i) the low level at which input data is modeled, which requires a fair amount of expert transformation to make it useful, or, at the other end,

(ii) the creation of pre-aggregated, pre-computed reports that are too high-level to drill down into, or across, the business domains of interest appropriately or conveniently.

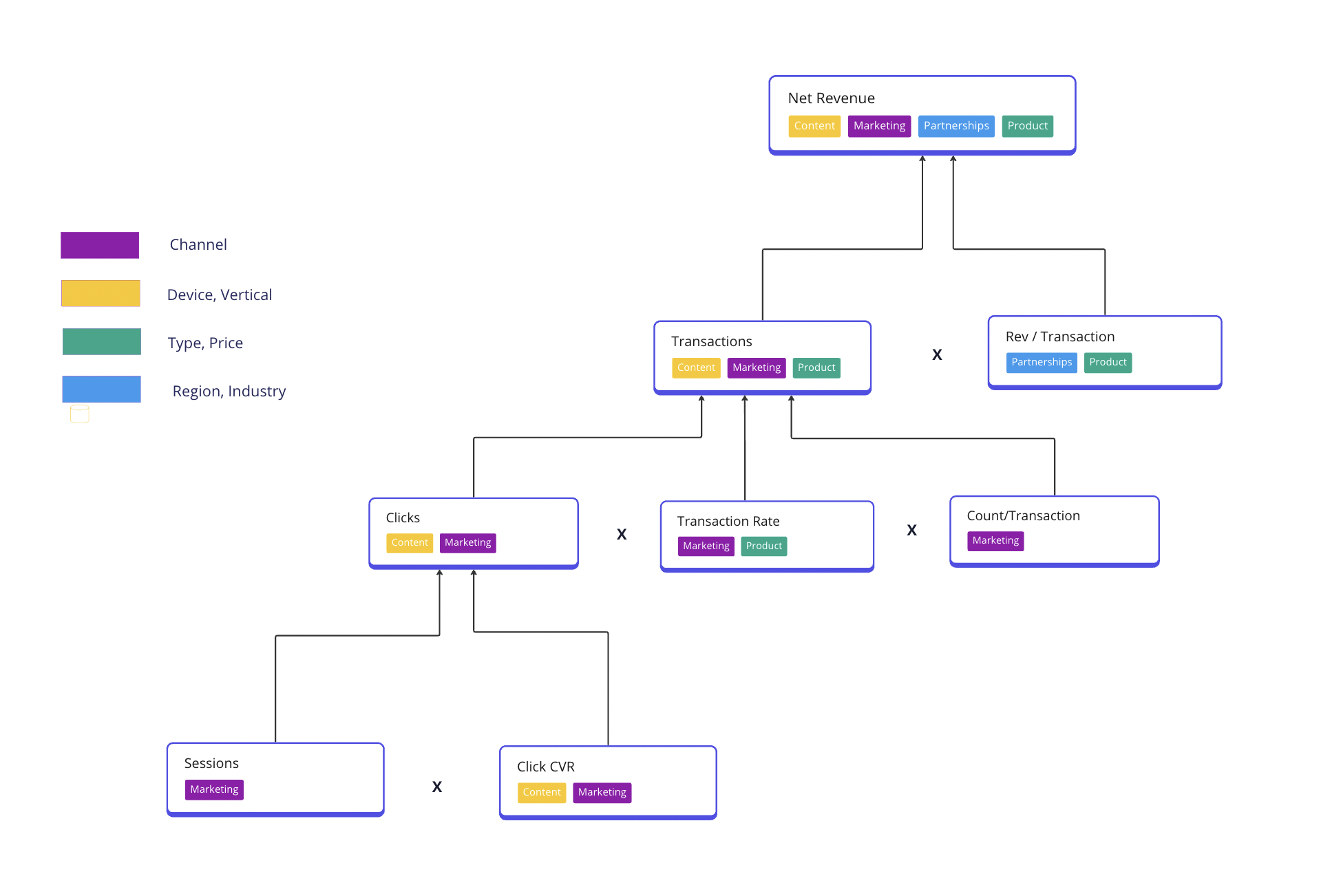

Even if a powerful “ask and answer” layer existed to traverse these data models more easily, nuanced, controlled calculations remain an essential part of a proper analysis workflow. This involves disaggregating metrics into component drivers, decomposing metrics into related sub-metrics, computing cross-tabs, recognizing confounding variables, and more.
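To make “disaggregating metrics into component drivers” concrete, here is a minimal sketch, assuming a metric that is the product of strictly positive drivers (for example, revenue = visitors × conversion rate × average order value). It uses a logarithmic-mean (LMDI-style) attribution, which is one common choice rather than a method the essay prescribes; the function name and driver names are hypothetical.

```python
import math


def decompose_multiplicative(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Attribute the change in a product of drivers to each driver.

    Hypothetical helper using a logarithmic-mean (LMDI-style) weighting.
    Assumes every driver value is strictly positive and both dicts share keys.
    """
    r0 = math.prod(before.values())  # metric value in the baseline period
    r1 = math.prod(after.values())   # metric value in the comparison period
    if math.isclose(r1, r0):
        # The top-level metric did not move, so there is nothing to attribute.
        return {name: 0.0 for name in before}
    # Logarithmic mean of the two totals: converts each driver's log-change
    # back into the metric's absolute units so the parts sum to r1 - r0.
    log_mean = (r1 - r0) / (math.log(r1) - math.log(r0))
    return {name: log_mean * math.log(after[name] / before[name]) for name in before}


if __name__ == "__main__":
    # Illustrative, made-up numbers for a hypothetical revenue metric.
    before = {"visitors": 10_000, "conversion_rate": 0.02, "avg_order_value": 50.0}
    after = {"visitors": 11_000, "conversion_rate": 0.018, "avg_order_value": 52.0}
    for name, contribution in decompose_multiplicative(before, after).items():
        print(f"{name}: {contribution:+.1f}")
```

Because the contributions add up exactly to the change in the top-level metric, an analyst can state that traffic growth was partly offset by a conversion drop without hand-writing a separate query for each driver.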

Today, this workflow must be crafted query by query by analysts juggling an endless queue of urgent, high-priority requests. There never seem to be enough analysts, analyses, or insights, regardless of the size of the data organization.

The table above envisions how a product mindset could radically shift the dynamics of the analytics function:

- shifting from operating on datasets to operating on the building blocks of metrics, entities, and segments;

- empowering analysts to construct metric trees that model the business processes of interest;

- leveraging rich business metadata to automate analysis workflows such as root cause analysis, experimentation or feature impact assessment, scenario planning, and more;

- creating templates for both the suite of algorithms and the metrics they operate on, across various business models and domains;

- eventually freeing up analysts to work on the most complicated class of analysis problems, such as inferring causality.
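The idea of metric trees driving automated root cause analysis can be sketched as follows. This is a purely illustrative sketch, assuming a tree whose internal nodes are either sums or products of their children and whose leaves carry observed values for two periods; the `Metric` class, the helper functions, and the greedy drill-down rule are all hypothetical and not a reference to any particular tool. At product nodes it reuses a logarithmic-mean (LMDI-style) split, which assumes strictly positive values.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Metric:
    """Hypothetical metric-tree node: a leaf with observed values, or a sum/product of children."""
    name: str
    op: str | None = None  # "sum", "product", or None for a leaf
    children: list[Metric] = field(default_factory=list)
    before: float = 0.0    # leaf value in the baseline period
    after: float = 0.0     # leaf value in the comparison period

    def value(self, period: str) -> float:
        if self.op is None:
            return self.before if period == "before" else self.after
        values = [child.value(period) for child in self.children]
        return sum(values) if self.op == "sum" else math.prod(values)


def child_contributions(node: Metric) -> dict[str, float]:
    """Split a node's change across its children so the parts sum to the node's delta."""
    if node.op == "sum":
        # Additive node: each child's own change is its contribution.
        return {c.name: c.value("after") - c.value("before") for c in node.children}
    # Multiplicative node: logarithmic-mean weighting (assumes positive values).
    v0, v1 = node.value("before"), node.value("after")
    if math.isclose(v0, v1):
        return {c.name: 0.0 for c in node.children}
    log_mean = (v1 - v0) / (math.log(v1) - math.log(v0))
    return {c.name: log_mean * math.log(c.value("after") / c.value("before"))
            for c in node.children}


def root_cause_path(node: Metric) -> list[str]:
    """Greedily drill down into the child that explains the most of each node's change."""
    path = [node.name]
    while node.op is not None:
        contributions = child_contributions(node)
        biggest = max(contributions, key=lambda name: abs(contributions[name]))
        node = next(c for c in node.children if c.name == biggest)
        path.append(node.name)
    return path


if __name__ == "__main__":
    def leaf(name: str, before: float, after: float) -> Metric:
        return Metric(name, before=before, after=after)

    # Made-up numbers: web conversion drops while app traffic grows.
    web = Metric("web_revenue", "product", [leaf("web_visitors", 10_000, 10_000),
                                            leaf("web_conversion", 0.02, 0.015),
                                            leaf("web_aov", 50.0, 50.0)])
    app = Metric("app_revenue", "product", [leaf("app_visitors", 5_000, 5_500),
                                            leaf("app_conversion", 0.03, 0.03),
                                            leaf("app_aov", 40.0, 40.0)])
    revenue = Metric("revenue", "sum", [web, app])
    print(root_cause_path(revenue))  # → ['revenue', 'web_revenue', 'web_conversion']
```

Once the tree is defined, the drill-down is a generic walk rather than a bespoke query per incident. A greedy rule is the simplest possible choice; a real system would likely surface several candidate paths and account for interactions between segments.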

Such a shift could unlock significant time savings and productivity gains, not only within organizations but also industry-wide, akin to the revolutions seen in software development or, more recently, in data pipeline construction.

Moreover, it would make far better use of the data assets that the data engineering function painstakingly produces through these pipelines.

We can already see glimpses of this transformation. Data platform teams are establishing internal metrics repositories, automating the calculation and compilation of executive-level KPIs for business reviews, and streamlining the output metrics of experiments. The introduction of dbt's metrics layer is another step in the right direction.

These are the sparks that indicate the future. I'm excited to witness all of this evolve into a full-fledged revolution.